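I have been interested in Flagger for a long time but never got around to trying it.

It felt like time to finally do something about that, and I had some free time at last, so I started playing with it.

Flagger is really cool.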

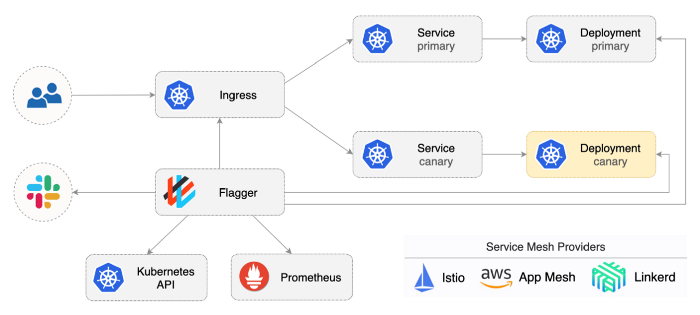

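What is Flagger

- It appears to implement Progressive Delivery by making good use of Istio's VirtualService. It also supports AWS App Mesh, Linkerd, and others.

- Progressive Delivery is a deployment method that performs a canary release while automatically running canary analysis, and rolls back if the analysis fails.

- Slack notifications are easy to set up too.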
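As a rough mental model of that loop, here is a small simulation. It is my own simplification, not Flagger's actual implementation: the function name and the `step_weight`, `max_weight`, and `threshold` parameters are illustrative, and each boolean stands in for one round of metric checks.

```python
def run_canary(checks, step_weight=10, max_weight=50, threshold=5):
    """Simulate a simplified canary rollout.

    checks: iterable of booleans, one analysis result per interval.
    Returns (outcome, history), where history lists the canary weights reached.
    """
    weight = 0
    failed = 0
    history = []
    for ok in checks:
        if not ok:
            # A failed check halts advancement; too many failures roll back.
            failed += 1
            if failed >= threshold:
                return "rolled_back", history
            continue
        if weight >= max_weight:
            # The canary survived at the maximum weight, so promote it.
            return "promoted", history
        weight = min(weight + step_weight, max_weight)
        history.append(weight)
    return "in_progress", history


print(run_canary([True] * 6))
print(run_canary([True] + [False] * 5))
```

The first call walks the weight up in steps and ends in a promotion. The second stalls after the first step and rolls back once the failure count reaches the threshold.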

Setting up Flagger

Deploying with Helm

Configure the Prometheus endpoint, the Slack destination, and so on.

    $ helm upgrade -i flagger flagger/flagger \
        --namespace=istio-system \
        --set metricsServer=http://prometheus.istio-system:9090 \
        --set slack.url=https://hooks.slack.com/services/YOUR-WEBHOOK-ID \
        --set slack.channel=general \
        --set slack.user=flagger

Checking the Helm values

    $ helm get values flagger
    USER-SUPPLIED VALUES:
    metricsServer: http://prometheus.monitoring:9090
    slack:
      channel: '@kashinoki38'
      url: https://hooks.slack.com/services/YOUR-WEBHOOK-ID
      user: flagger

Deploying the sample app, podinfo

Deploy podinfo into the test namespace with kustomize.

    $ kubectl create ns test
    $ kubectl label namespace test istio-injection=enabled
    $ kubectl apply -k github.com/stefanprodan/podinfo//kustomize

The kustomize apply creates a Deployment, a Service, and an HPA.

    $ kg all
    NAME                           READY   STATUS    RESTARTS   AGE
    pod/podinfo-7fdfdbd99c-4vc6n   2/2     Running   0          45s
    pod/podinfo-7fdfdbd99c-nfsg7   2/2     Running   0          52s

    NAME              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
    service/podinfo   ClusterIP   10.179.15.136   <none>        9898/TCP,9999/TCP   4m47s

    NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/podinfo   2/2     2            2           53s

    NAME                                 DESIRED   CURRENT   READY   AGE
    replicaset.apps/podinfo-7fdfdbd99c   2         2         2       54s

    NAME                                          REFERENCE            TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
    horizontalpodautoscaler.autoscaling/podinfo   Deployment/podinfo   <unknown>/99%   2         4         2          6d18h

Flagger Canary configuration: canary.yaml

The Canary is configured in canary.yaml, which sets the following:

- The target deployment (.spec.targetRef)
- The canary analysis conditions (.spec.analysis)
  - The retry interval (interval), the number of retries before rollback (threshold), and how traffic is allocated to the canary

canary.yaml

    apiVersion: flagger.app/v1beta1
    kind: Canary
    metadata:
      name: podinfo
      namespace: test
    spec:
      # deployment reference
      targetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: podinfo
      # the maximum time in seconds for the canary deployment
      # to make progress before it is rollback (default 600s)
      progressDeadlineSeconds: 60
      # HPA reference (optional)
      autoscalerRef:
        apiVersion: autoscaling/v2beta2
        kind: HorizontalPodAutoscaler
        name: podinfo
      service:
        # service port number
        port: 80
        # container port number or name (optional)
        targetPort: 9898
        # Istio gateways (optional)
        gateways:
        - ingressgateway.istio-system
        # Istio virtual service host names (optional)
        hosts:
        - "*"
        # - app.example.com
        # Istio traffic policy (optional)
        trafficPolicy:
          tls:
            # use ISTIO_MUTUAL when mTLS is enabled
            mode: DISABLE
        # Istio retry policy (optional)
        retries:
          attempts: 3
          perTryTimeout: 1s
          retryOn: "gateway-error,connect-failure,refused-stream"
      analysis:
        # schedule interval (default 60s)
        interval: 1m
        # max number of failed metric checks before rollback
        threshold: 5
        # max traffic percentage routed to canary
        # percentage (0-100)
        maxWeight: 50
        # canary increment step
        # percentage (0-100)
        stepWeight: 10
        metrics:
        - name: request-success-rate
          # minimum req success rate (non 5xx responses)
          # percentage (0-100)
          thresholdRange:
            min: 99
          interval: 1m
        # - name: request-duration
        #   # maximum req duration P99
        #   # milliseconds
        #   thresholdRange:
        #     max: 500
        #   interval: 30s
        # testing (optional)
        webhooks:
        - name: acceptance-test
          type: pre-rollout
          url: http://flagger-loadtester.test/
          timeout: 30s
          metadata:
            type: bash
            cmd: "curl -sd 'test' http://podinfo-canary:9898/token | grep token"
        - name: load-test
          url: http://flagger-loadtester.test/
          timeout: 5s
          metadata:
            cmd: "hey -z 1m -q 10 -c 2 http://podinfo-canary.test:9898/"
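To get a feel for what the analysis values in this canary.yaml imply, the sketch below does the arithmetic. As far as I understand the Flagger docs, the minimum time to promotion is interval * (maxWeight / stepWeight) and the time to rollback is interval * threshold. The function name and the returned dict layout are my own.

```python
def rollout_timing(interval_s, step_weight, max_weight, threshold):
    """Estimate the weight schedule and timings for a canary analysis."""
    steps = -(-max_weight // step_weight)  # ceiling division
    weights = [min(step_weight * i, max_weight) for i in range(1, steps + 1)]
    return {
        "weights": weights,
        # Minimum time to walk through every weight step, in seconds.
        "min_promote_s": interval_s * steps,
        # Time until rollback if every check fails, in seconds.
        "rollback_s": interval_s * threshold,
    }


# Values taken from canary.yaml: interval 1m, stepWeight 10, maxWeight 50, threshold 5.
timing = rollout_timing(interval_s=60, step_weight=10, max_weight=50, threshold=5)
print(timing)
```

With these settings both numbers come out to five minutes, which matches the roughly one-minute spacing between weight steps in the event logs later in this post.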

    $ kubectl apply -f canary.yaml

As listed below, Services, a Deployment, and a VirtualService are created.

- Services
  - podinfo
  - podinfo-primary ★new
  - podinfo-canary ★new
- Deployment
  - podinfo
  - podinfo-primary ★new
- VirtualService
  - podinfo ★new

The replicas of podinfo are now 0.

    $ kg all
    NAME                                   READY   STATUS    RESTARTS   AGE
    pod/podinfo-primary-78cc86fbdb-csljl   2/2     Running   0          110s
    pod/podinfo-primary-78cc86fbdb-wkzqj   2/2     Running   0          110s

    NAME                      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    service/podinfo           ClusterIP   10.179.15.136   <none>        9898/TCP   16m
    service/podinfo-canary    ClusterIP   10.179.1.5      <none>        9898/TCP   111s
    service/podinfo-primary   ClusterIP   10.179.4.82     <none>        9898/TCP   111s

    NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/podinfo           0/0     0            0           12m
    deployment.apps/podinfo-primary   2/2     2            2           112s

    NAME                                         DESIRED   CURRENT   READY   AGE
    replicaset.apps/podinfo-7fdfdbd99c           0         0         0       12m
    replicaset.apps/podinfo-primary-78cc86fbdb   2         2         2       112s

    NAME                                                  REFERENCE                    TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
    horizontalpodautoscaler.autoscaling/podinfo           Deployment/podinfo           <unknown>/99%   2         4         0          6d18h
    horizontalpodautoscaler.autoscaling/podinfo-primary   Deployment/podinfo-primary   4%/99%          2         4         2          82s

    NAME                         STATUS        WEIGHT   LASTTRANSITIONTIME
    canary.flagger.app/podinfo   Initialized   0        2021-01-21T20:28:57Z

Service definitions

    $ kg svc -o yaml
    apiVersion: v1
    items:
    - apiVersion: v1
      kind: Service
      metadata:
        annotations:
          cloud.google.com/neg: '{"ingress":true}'
          kubectl.kubernetes.io/last-applied-configuration: |
            {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"podinfo","namespace":"test"},"spec":{"ports":[{"name":"http","port":9898,"protocol":"TCP","targetPort":"http"},{"name":"grpc","port":9999,"protocol":"TCP","targetPort":"grpc"}],"selector":{"app":"podinfo"},"type":"ClusterIP"}}
        creationTimestamp: "2021-01-21T20:13:56Z"
        name: podinfo
        namespace: test
        resourceVersion: "133574186"
        selfLink: /api/v1/namespaces/test/services/podinfo
        uid: cd719bd9-9ccb-4ccd-948b-9272f3e8789e
      spec:
        clusterIP: 10.179.15.136
        ports:
        - name: http
          port: 9898
          protocol: TCP
          targetPort: 9898
        selector:
          app: podinfo-primary
        sessionAffinity: None
        type: ClusterIP
      status:
        loadBalancer: {}
    - apiVersion: v1
      kind: Service
      metadata:
        creationTimestamp: "2021-01-21T20:28:27Z"
        labels:
          app: podinfo-canary
        name: podinfo-canary
        namespace: test
        ownerReferences:
        - apiVersion: flagger.app/v1beta1
          blockOwnerDeletion: true
          controller: true
          kind: Canary
          name: podinfo
          uid: ed4f3184-4789-49bd-8eec-5c62bdda3682
        resourceVersion: "133574170"
        selfLink: /api/v1/namespaces/test/services/podinfo-canary
        uid: c318abd8-6450-4d1e-8637-cd780525798e
      spec:
        clusterIP: 10.179.1.5
        ports:
        - name: http
          port: 9898
          protocol: TCP
          targetPort: 9898
        selector:
          app: podinfo
        sessionAffinity: None
        type: ClusterIP
      status:
        loadBalancer: {}
    - apiVersion: v1
      kind: Service
      metadata:
        creationTimestamp: "2021-01-21T20:28:27Z"
        labels:
          app: podinfo-primary
        name: podinfo-primary
        namespace: test
        ownerReferences:
        - apiVersion: flagger.app/v1beta1
          blockOwnerDeletion: true
          controller: true
          kind: Canary
          name: podinfo
          uid: ed4f3184-4789-49bd-8eec-5c62bdda3682
        resourceVersion: "133574171"
        selfLink: /api/v1/namespaces/test/services/podinfo-primary
        uid: 10a8651c-4465-42d8-8f6b-386721fd5381
      spec:
        clusterIP: 10.179.4.82
        ports:
        - name: http
          port: 9898
          protocol: TCP
          targetPort: 9898
        selector:
          app: podinfo-primary
        sessionAffinity: None
        type: ClusterIP
      status:
        loadBalancer: {}
    kind: List
    metadata:
      resourceVersion: ""
      selfLink: ""

VirtualService definition

It is configured to send 100% of the traffic to podinfo-primary.

    $ kg vs -o yaml
    apiVersion: v1
    items:
    - apiVersion: networking.istio.io/v1alpha3
      kind: VirtualService
      metadata:
        creationTimestamp: "2021-01-21T20:42:27Z"
        generation: 1
        name: podinfo
        namespace: test
        ownerReferences:
        - apiVersion: flagger.app/v1beta1
          blockOwnerDeletion: true
          controller: true
          kind: Canary
          name: podinfo
          uid: 1fd42bd1-e477-4af8-8120-fa54d9be0f86
        resourceVersion: "133580636"
        selfLink: /apis/networking.istio.io/v1alpha3/namespaces/test/virtualservices/podinfo
        uid: 00cfc642-ccfa-4933-b3be-90a2fdcc7d00
      spec:
        gateways:
        - ingressgateway.istio-system.svc.cluster.local
        hosts:
        - app.istio.example.com
        - podinfo
        http:
        - route:
          - destination:
              host: podinfo-primary
            weight: 100
          - destination:
              host: podinfo-canary
            weight: 0
    kind: List
    metadata:
      resourceVersion: ""
      selfLink: ""

Mapping between Services and Deployments.

    graph LR;
      a([service/podinfo])-->deploy/podinfo-primary;
      b([service/podinfo-canary])-->deploy/podinfo;
      c([service/podinfo-primary])-->deploy/podinfo-primary;
      deploy/podinfo-canary
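The same mapping can be derived from the Service selectors shown in the `kg svc -o yaml` output. The sketch below is only an illustration: the selectors are copied from that output, but the pod labels for each Deployment are my assumption (inferred from the selectors, not read from the cluster), and the helper name is made up.

```python
# Selectors copied from the Service definitions in this post.
services = {
    "podinfo": {"app": "podinfo-primary"},
    "podinfo-canary": {"app": "podinfo"},
    "podinfo-primary": {"app": "podinfo-primary"},
}

# Pod labels per Deployment: assumed, not verified against the cluster.
deployments = {
    "podinfo": {"app": "podinfo"},
    "podinfo-primary": {"app": "podinfo-primary"},
}


def backing_deployments(selector, deployments):
    """Return the Deployments whose pod labels satisfy a Service selector."""
    return sorted(
        name
        for name, labels in deployments.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


mapping = {svc: backing_deployments(sel, deployments) for svc, sel in services.items()}
for svc, targets in mapping.items():
    print(f"service/{svc} -> {targets}")
```

The point to notice is that service/podinfo-canary selects the original podinfo Deployment, while both service/podinfo and service/podinfo-primary select podinfo-primary.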

Creating the tester

This is the component that runs load tests when called from a Flagger webhook.

    $ kubectl apply -k https://github.com/fluxcd/flagger//kustomize/tester?ref=main
    service/flagger-loadtester created
    deployment.apps/flagger-loadtester created

Webhook configuration

The acceptance test is a curl against http://podinfo-canary:9898/token.

The load-test runs hey against http://podinfo-canary:9898/.

    webhooks:
    - name: acceptance-test
      type: pre-rollout
      url: http://flagger-loadtester.test/
      timeout: 30s
      metadata:
        type: bash
        cmd: "curl -sd 'test' http://podinfo-canary:9898/token | grep token"
    - name: load-test
      url: http://flagger-loadtester.test/
      timeout: 5s
      metadata:
        cmd: "hey -z 1m -q 10 -c 2 http://podinfo-canary.test:9898/"
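For a sense of how much load that hey command generates: `-z` is the duration, `-c` the number of concurrent workers, and `-q` the rate limit in queries per second per worker. The sketch below just multiplies them out. The function name is mine, and the result is an upper bound that assumes the target keeps up with the requested rate.

```python
def hey_expected_requests(duration_s, qps_per_worker, workers):
    """Upper bound on requests sent by `hey -z <duration> -q <qps> -c <workers>`."""
    return duration_s * qps_per_worker * workers


# hey -z 1m -q 10 -c 2
total = hey_expected_requests(duration_s=60, qps_per_worker=10, workers=2)
print(total)  # 1200 requests per run, about 20 requests per second
```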

Deployment: success case

The acceptance-test and load-test run as defined in webhooks.

Try changing the image version.

    $ kubectl -n test set image deployment/podinfo podinfod=stefanprodan/podinfo:3.1.1
    $ watch "kubectl describe canary podinfo -n test|tail"
    Every 2.0s: kubectl describe canary podinfo -n test|tail    DESKTOP-NTAMOVN: Mon Jan 25 00:42:58 2021

      Normal  Synced  8m22s (x5 over 6h45m)  flagger  New revision detected! Scaling up podinfo.test  ★ change to the target detected
      Normal  Synced  7m22s (x5 over 6h44m)  flagger  Advance podinfo.test canary weight 10  ★ the VirtualService sends 10% to podinfo (increasing by 10% every minute)
      Normal  Synced  7m22s (x5 over 6h44m)  flagger  Pre-rollout check acceptance-test passed  ★ acceptance test cleared
      Normal  Synced  7m22s (x5 over 6h44m)  flagger  Starting canary analysis for podinfo.test
      Normal  Synced  6m22s                  flagger  Advance podinfo.test canary weight 20
      Normal  Synced  5m22s                  flagger  Advance podinfo.test canary weight 30
      Normal  Synced  4m22s                  flagger  Advance podinfo.test canary weight 40
      Normal  Synced  3m22s                  flagger  Advance podinfo.test canary weight 50
      Normal  Synced  2m22s                  flagger  Copying podinfo.test template spec to podinfo-primary.test
      Normal  Synced  22s (x2 over 82s)      flagger  (combined from similar events): Promotion completed! Scaling down podinfo.test

The podinfo pods start up for verification.

    Every 2.0s: kubectl get po    DESKTOP-NTAMOVN: Mon Jan 25 15:39:58 2021

    NAME                                  READY   STATUS    RESTARTS   AGE
    flagger-loadtester-597d7756b5-xhcdq   2/2     Running   0          21h
    podinfo-7b96774c87-d5n4s              2/2     Running   0          19s   ★ newly started
    podinfo-7b96774c87-prnlv              1/2     Running   0          14s   ★ newly started
    podinfo-primary-b49dbb9dc-8jgq4       2/2     Running   0          14h
    podinfo-primary-b49dbb9dc-jslz4       2/2     Running   0          14h

The canary analysis passes and podinfo-primary is replaced.

    Every 2.0s: kubectl get po    DESKTOP-NTAMOVN: Mon Jan 25 15:56:57 2021

    NAME                                  READY   STATUS            RESTARTS   AGE
    flagger-loadtester-597d7756b5-xhcdq   2/2     Running           0          22h
    podinfo-5788b6c694-f954v              2/2     Running           0          6m8s
    podinfo-5788b6c694-kjzv8              2/2     Running           0          6m18s
    podinfo-primary-67484dd774-lkxdf      2/2     Running           0          18s
    podinfo-primary-67484dd774-xvnbj      0/2     PodInitializing   0          6s
    podinfo-primary-b49dbb9dc-8jgq4       2/2     Running           0          15h
    podinfo-primary-b49dbb9dc-jslz4       2/2     Terminating       0          15h

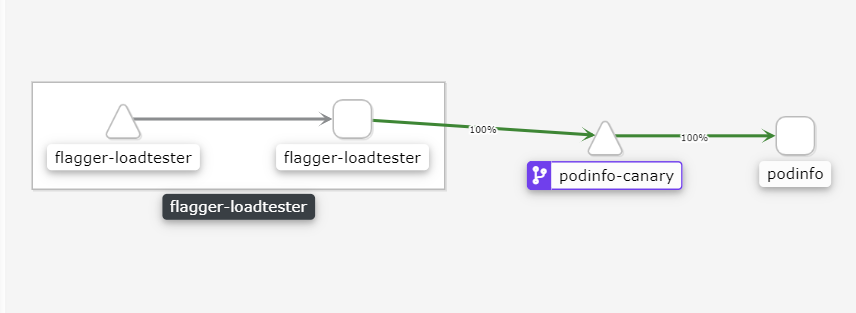

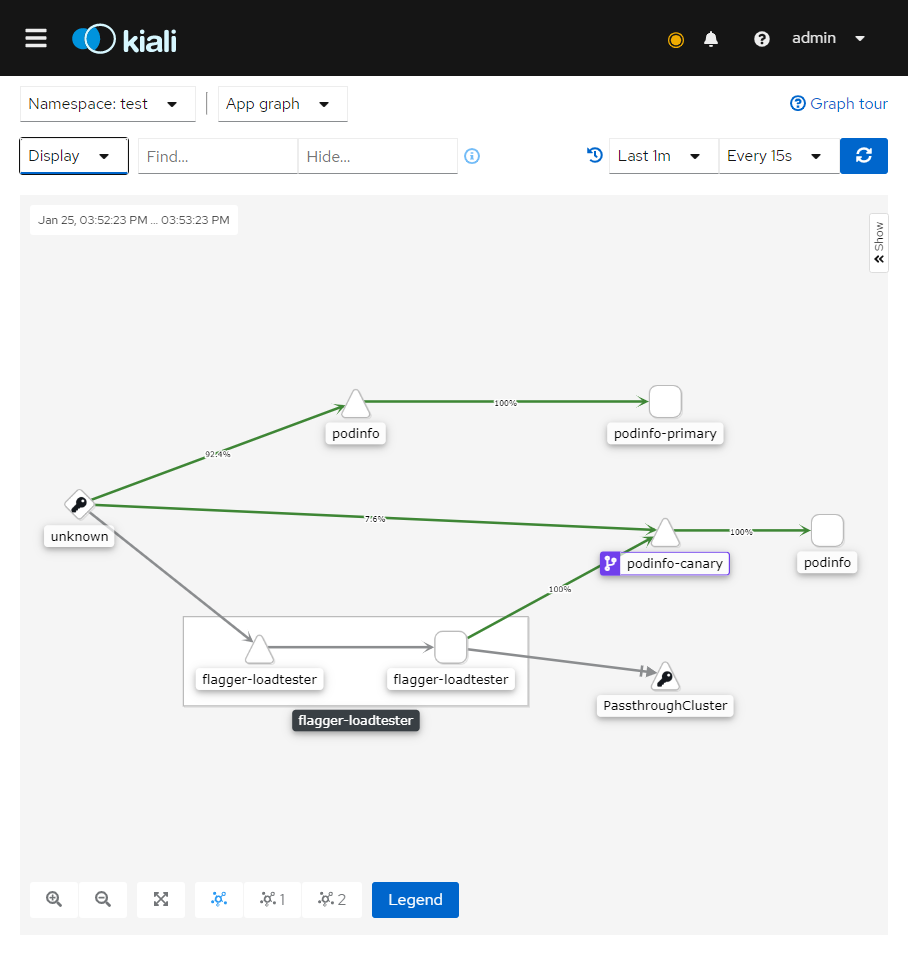

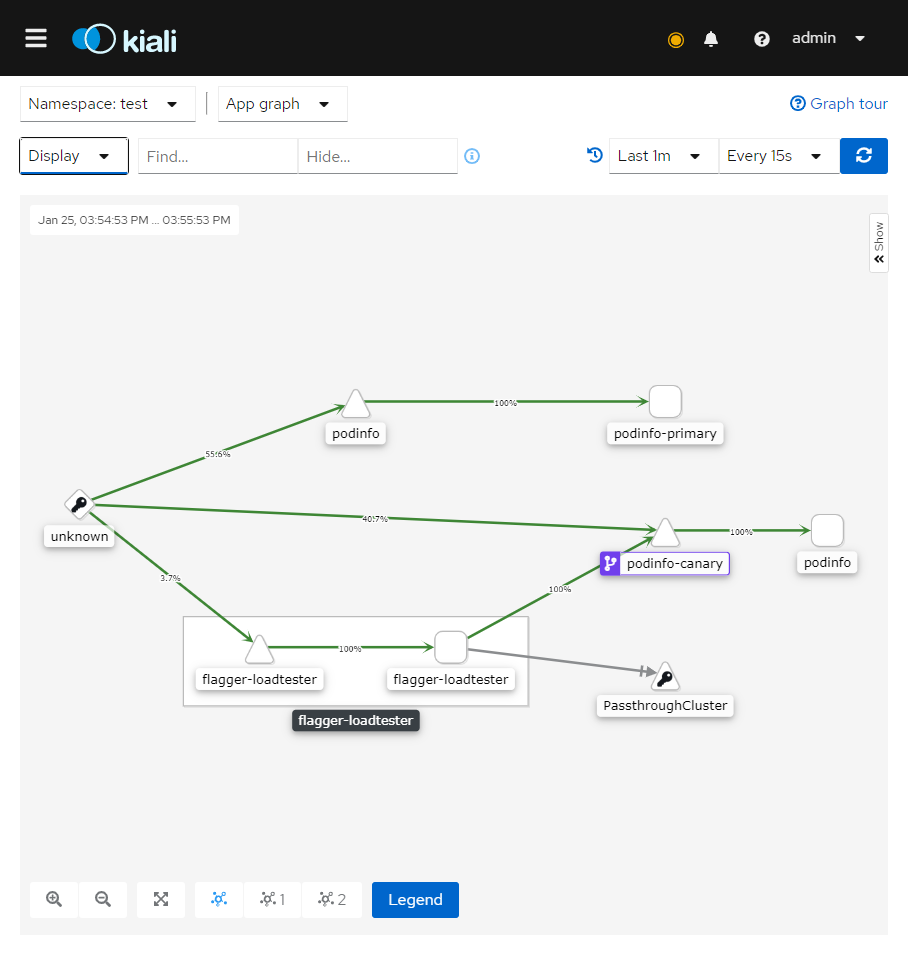

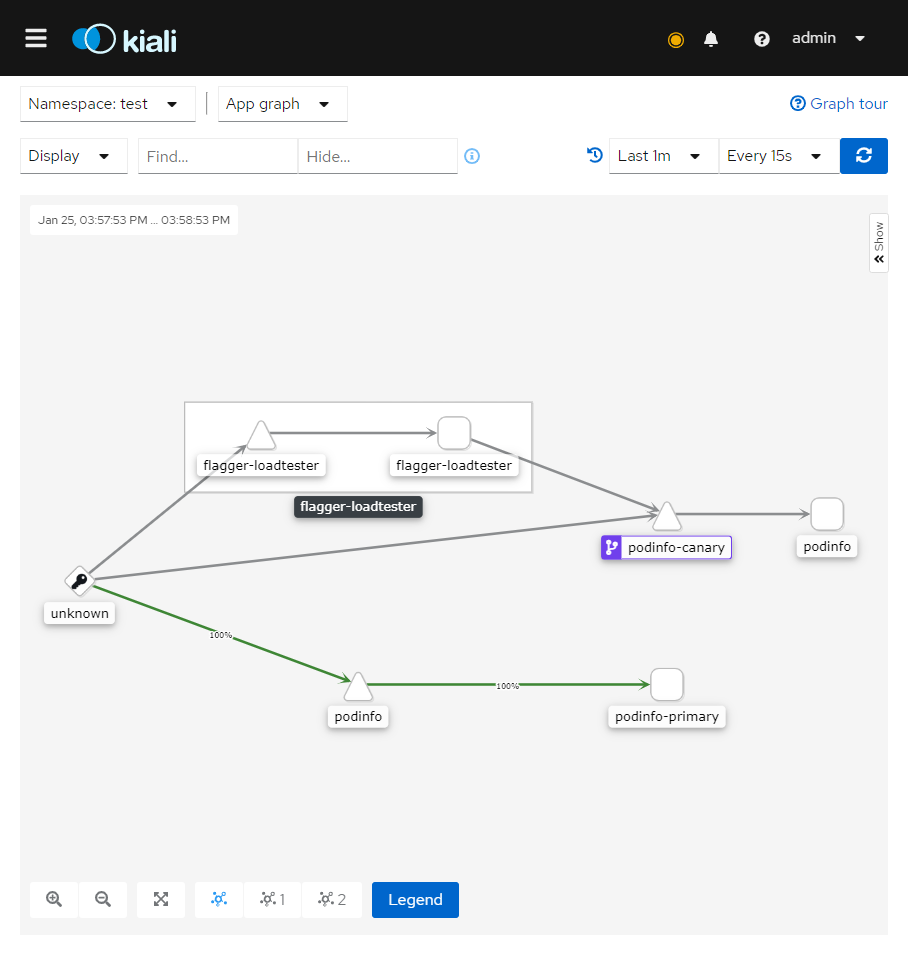

Kiali

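The load-test is configured not to go through the IngressGateway, so it sends 100% of its load directly to podinfo.

For the load coming in through the IngressGateway, you can see the share routed to podinfo-canary rise gradually as the canary weight increases.

I forgot to check, but the VirtualService weights are presumably changing along the way.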
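One way to check this next time would be to read the route weights out of the VirtualService during a rollout. The helper below is a minimal sketch: the function name is mine, and the sample JSON mirrors the VirtualService shown earlier but with hypothetical 90/10 weights, not values I actually observed.

```python
import json


def route_weights(virtual_service):
    """Return {destination host: weight} for every HTTP route in a VirtualService."""
    weights = {}
    for http_route in virtual_service["spec"].get("http", []):
        for route in http_route.get("route", []):
            weights[route["destination"]["host"]] = route.get("weight", 0)
    return weights


# Hypothetical mid-rollout state (canary weight 10), for illustration only.
sample = json.loads("""
{
  "kind": "VirtualService",
  "metadata": {"name": "podinfo", "namespace": "test"},
  "spec": {
    "http": [
      {"route": [
        {"destination": {"host": "podinfo-primary"}, "weight": 90},
        {"destination": {"host": "podinfo-canary"}, "weight": 10}
      ]}
    ]
  }
}
""")

print(route_weights(sample))
```

Against a real cluster, the input could come from `kubectl -n test get virtualservice podinfo -o json` piped into a script that calls `json.load(sys.stdin)`.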

↓

Once the canary analysis completes, podinfo-primary is updated and receives 100% of the traffic.

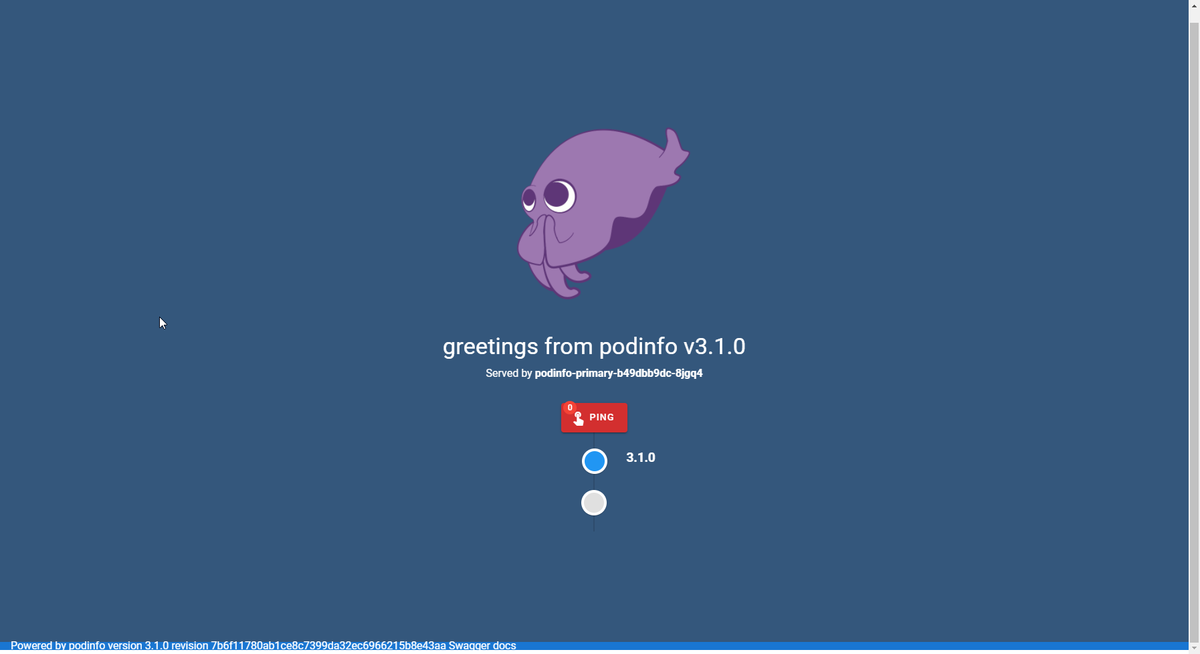

UI

Before the change

No matter how many times I try, it shows v3.1.0.

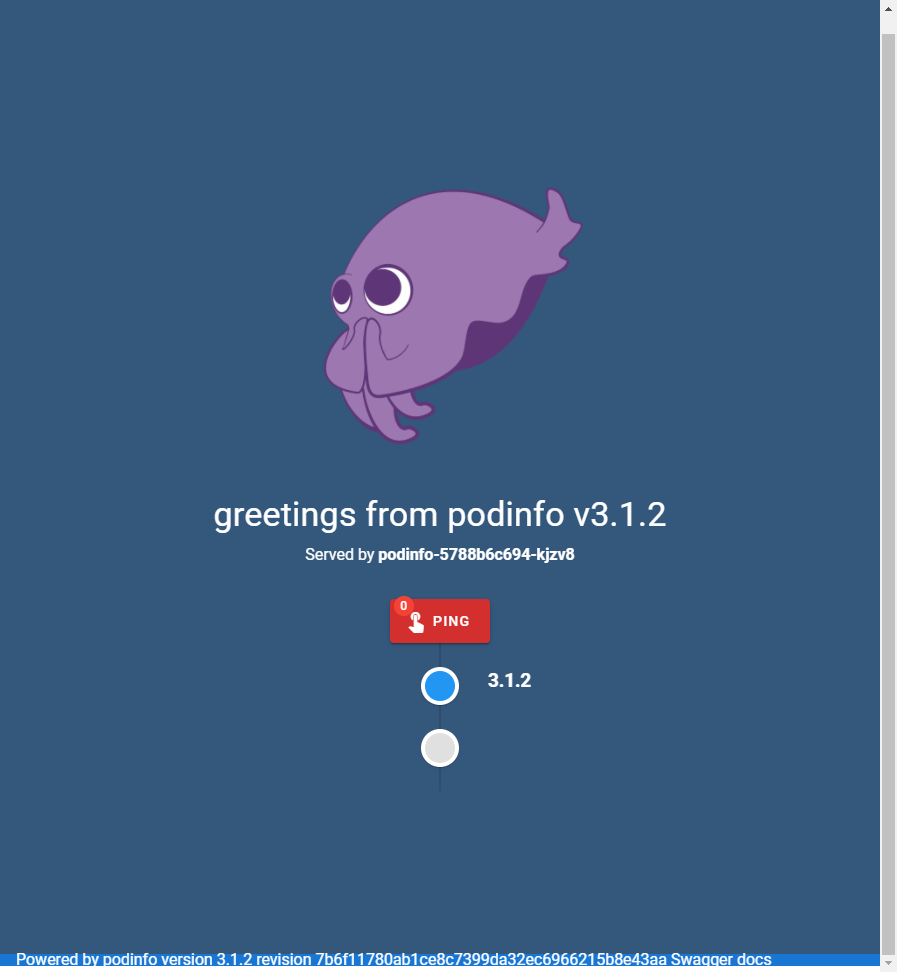

canary weight 10

After a few clicks, it shows v3.1.2.

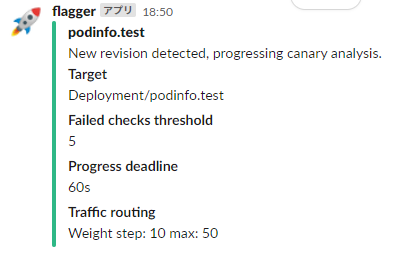

Slack integration

1. Start

2. Deployment complete

Deployment: failure case

acceptance-test failure

    Every 2.0s: kubectl describe canary podinfo -n test|tail    DESKTOP-NTAMOVN: Mon Jan 25 15:45:50 2021

      Last Transition Time:  2021-01-25T06:45:38Z
      Phase:                 Failed
    Events:
      Type     Reason  Age                  From     Message
      ----     ------  ----                 ----     -------
      Normal   Synced  6m15s (x6 over 21h)  flagger  New revision detected! Scaling up podinfo.test
      Normal   Synced  75s (x10 over 21h)   flagger  Starting canary analysis for podinfo.test
      Warning  Synced  65s (x5 over 5m5s)   flagger  Halt podinfo.test advancement pre-rollout check acceptance-test failed command curl -sd 'test' http://podinfo-canary:9898/token | grep token failed: : exit status 1  ★ failed because the curl did not succeed
      Warning  Synced  15s (x5 over 21h)    flagger  Rolling back podinfo.test failed checks threshold reached 5
      Warning  Synced  15s (x5 over 21h)    flagger  Canary failed! Scaling down podinfo.test

load-test failure

It seems request-duration cannot be collected properly, so if .spec.analysis.metrics in canary.yaml is left as is, the rollout fails during the load-test.

(It works once the request-duration metric is commented out. Possibly because the metric name changed between Istio versions?)

    metrics:
    - name: request-success-rate
      # minimum req success rate (non 5xx responses)
      # percentage (0-100)
      thresholdRange:
        min: 99
      interval: 1m
    # - name: request-duration
    #   # maximum req duration P99
    #   # milliseconds
    #   thresholdRange:
    #     max: 500
    #   interval: 30s
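One way to test the metric-name hypothesis would be to list the metric names Prometheus actually has and look for the duration histogram. Prometheus exposes these at `/api/v1/label/__name__/values`. The sketch below only filters such a response: the helper name is mine, and the sample response is made up for illustration (I believe newer Istio releases expose a milliseconds-based histogram, but I have not confirmed what this cluster reports).

```python
def duration_histograms(metric_names):
    """Pick out Istio request-duration histogram buckets from a list of metric names."""
    return sorted(
        name
        for name in metric_names
        if name.startswith("istio_request_duration_") and name.endswith("_bucket")
    )


# Made-up example of a /api/v1/label/__name__/values response body.
sample_response = {
    "status": "success",
    "data": [
        "istio_requests_total",
        "istio_request_bytes_bucket",
        "istio_request_duration_milliseconds_bucket",
    ],
}

print(duration_histograms(sample_response["data"]))
```

If the only match is a milliseconds-based bucket, a query written against `istio_request_duration_seconds_bucket` would return no values, which would be consistent with the "no values found" warning in the events.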

    $ kubectl -n test set image deployment/podinfo podinfod=stefanprodan/podinfo:3.1.1
    $ watch "kubectl describe canary podinfo -n test|tail"
    Every 2.0s: kubectl -n test describe canary/podinfo|tail -n 20    DESKTOP-NTAMOVN: Sun Jan 24 18:05:01 2021

        Last Update Time:    2021-01-24T09:04:37Z
        Message:             Canary analysis failed, Deployment scaled to zero.
        Reason:              Failed
        Status:              False
        Type:                Promoted
      Failed Checks:         0
      Iterations:            0
      Last Applied Spec:     5f8fd4f546
      Last Transition Time:  2021-01-24T09:04:37Z
      Phase:                 Failed
    Events:
      Type     Reason  Age                 From     Message
      ----     ------  ----                ----     -------
      Normal   Synced  7m30s               flagger  New revision detected! Scaling up podinfo.test
      Normal   Synced  6m30s               flagger  Starting canary analysis for podinfo.test
      Normal   Synced  6m30s               flagger  Pre-rollout check acceptance-test passed
      Normal   Synced  6m30s               flagger  Advance podinfo.test canary weight 10
      Warning  Synced  90s (x5 over 5m30s) flagger  Halt advancement no values found for istio metric request-duration probably podinfo.test is not receiving traffic  ★ fails because request-duration, one of the defined metrics, cannot be collected
      Warning  Synced  30s                 flagger  Rolling back podinfo.test failed checks threshold reached 5
      Warning  Synced  30s                 flagger  Canary failed! Scaling down podinfo.test

By default, request-duration maps to the PromQL shown below.

https://docs.flagger.app/faq#metrics

    analysis:
      metrics:
      - name: request-duration
        # maximum req duration P99
        # milliseconds
        thresholdRange:
          max: 500
        interval: 1m

↓

    histogram_quantile(0.99,
      sum(
        irate(
          istio_request_duration_seconds_bucket{
            reporter="destination",
            destination_workload=~"$workload",
            destination_workload_namespace=~"$namespace"
          }[$interval]
        )
      ) by (le)
    )
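To see the concrete query that results, the placeholders can be filled in by hand and the output pasted into the Prometheus UI. This is a small sketch using Python's `string.Template`. The values I substitute (podinfo, test, 1m) are my guess at what gets filled in for this Canary, not something I confirmed in Flagger's code.

```python
from string import Template

# The default request-duration query quoted above, with its $placeholders intact.
QUERY = Template("""histogram_quantile(0.99,
  sum(
    irate(
      istio_request_duration_seconds_bucket{
        reporter="destination",
        destination_workload=~"$workload",
        destination_workload_namespace=~"$namespace"
      }[$interval]
    )
  ) by (le)
)""")

# Assumed values for the podinfo Canary in the test namespace.
query = QUERY.substitute(workload="podinfo", namespace="test", interval="1m")
print(query)
```

If the rendered query returns nothing in Prometheus while traffic is flowing, that points at the metric itself rather than at the load-test.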

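Impressions

- With a well-defined .spec.analysis, it looks like not only the post-release canary analysis but also post-release load testing could be automated nicely.
  - Not applicable when the DB schema changes?
- Around canary analysis
- Around load-test
  - What should I do if I want to run a load-test that includes write operations?
  - I would like the load-test to pull the latest scenarios and data.
- I would like to customize the content of the Slack posts (attach graphs, for example).

References

Procedure reference: medium.com

Official Flagger demo: docs.flagger.app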